My thesis: Creating and evaluating an audio detection interface for musical play and learning with low-cost, at-home musical instruments

Background: Music-making benefits children

While there are varied approaches to teaching children music, there is a common view that children benefit from actively participating in music creation. Manipulating real-life instruments introduces sensory and physical task parameters vital to motor learning. However, children face many barriers to early childhood music education, including difficulty finding suitable programs, as well as cost, travel, and time constraints. For children with motor disabilities, these barriers are compounded: they are more likely to live in low-income households and less likely to attend regulated daycare or preschool settings where early music education may be offered. This suggests a need for low-cost, home-based approaches to expand MMT for children with disabilities.

A few “mixed reality” technologies that detect and respond to audio signals from real-life pitched instruments (e.g., piano, violin, guitar) have recently emerged. However, non-pitched percussion instruments are more prominent in early childhood and more appropriate for developing motor abilities. Non-pitched percussion instruments (e.g., maracas, tambourines) are more challenging to detect and distinguish because their frequency bands overlap and their sound quality and play style vary more widely. Bootle Band, a mixed-reality music game developed in the PEARL lab for motor therapy, can detect such instruments but has been observed not to generalize well across instrument families. This technology requires frequent recalibration when (1) a user changes gameplay location (i.e., the ambient background noise changes), or (2) a user plays an instrument that has not been calibrated.

Our long-term goal is to create and evaluate an interactive technology that responds to and guides families in high-quality MMT with low-cost musical instruments at home.

Our questions are:

- How well does the audio detection interface capture musical inputs?

- Does the audio detection interface promote an engaging user experience for children with and without physical disabilities?

Our objectives are:

- Create and evaluate a model for detecting and classifying families of instruments without bias toward specific brands or materials.

- Investigate and devise approaches for measuring the similarity between a child’s musical input and a target steady beat or rhythm.

Methods: Developing and Evaluating Algorithms

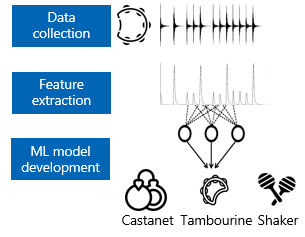

Musical Instrument Classifier

We have compiled an in-lab database of audio samples from a wide range of non-pitched percussion instruments (e.g., tambourines, shakers, castanets). From these samples, we extracted audio features and trained a machine learning model using supervised learning techniques.
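The poster does not list which features or which supervised model were used, so the following is only a minimal sketch of the general feature-extraction-plus-classifier pattern. It assumes three common audio features (zero-crossing rate, RMS energy, spectral centroid) and swaps in a simple nearest-centroid classifier as a stand-in for the actual model. It is trained on synthetic toy clips rather than the lab database, and it does not reproduce the reported accuracy. All function and class names (`extract_features`, `NearestCentroidClassifier`, `synth_shaker`, `synth_hand_drum`) are hypothetical.

```python
import cmath
import math
import random
from statistics import mean, pstdev


def spectral_centroid(clip, sample_rate):
    """Magnitude-weighted mean frequency (Hz) from a naive DFT; fine for short clips."""
    n = len(clip)
    mags = [abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(clip)))
            for k in range(n // 2 + 1)]
    total = sum(mags)
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total if total else 0.0


def extract_features(clip, sample_rate):
    """Hypothetical feature vector: [zero-crossing rate, RMS energy, spectral centroid]."""
    zcr = sum((a >= 0) != (b >= 0) for a, b in zip(clip, clip[1:])) / (len(clip) - 1)
    rms = math.sqrt(sum(x * x for x in clip) / len(clip))
    return [zcr, rms, spectral_centroid(clip, sample_rate)]


class NearestCentroidClassifier:
    """Supervised stand-in: z-score each feature, then pick the closest class mean."""

    def fit(self, features, labels):
        columns = list(zip(*features))
        self.mu = [mean(c) for c in columns]
        self.sigma = [pstdev(c) or 1.0 for c in columns]  # avoid dividing by zero
        scaled = [self._scale(f) for f in features]
        self.centroids = {
            label: [mean(c) for c in zip(*(s for s, lab in zip(scaled, labels) if lab == label))]
            for label in set(labels)
        }
        return self

    def _scale(self, feature):
        return [(v - m) / s for v, m, s in zip(feature, self.mu, self.sigma)]

    def predict(self, feature):
        z = self._scale(feature)
        return min(self.centroids, key=lambda label: math.dist(z, self.centroids[label]))


# Toy synthetic clips standing in for recorded samples (NOT the lab database).
SR, N = 8000, 256


def synth_shaker(rng):
    # broadband noise burst: high zero-crossing rate, high spectral centroid
    return [rng.uniform(-0.5, 0.5) for _ in range(N)]


def synth_hand_drum(rng):
    # decaying low-frequency tone: few zero crossings, low spectral centroid
    f = rng.uniform(80, 150)
    return [math.exp(-t / 60) * math.sin(2 * math.pi * f * t / SR) for t in range(N)]


rng = random.Random(0)
train_x, train_y = [], []
for _ in range(5):
    for label, synth in (("shaker", synth_shaker), ("hand drum", synth_hand_drum)):
        train_x.append(extract_features(synth(rng), SR))
        train_y.append(label)
clf = NearestCentroidClassifier().fit(train_x, train_y)
```

Standardizing the features before measuring distance keeps the spectral centroid (in Hz) from dominating the zero-crossing rate (a fraction between 0 and 1). A real system trained on recorded instruments would likely need richer features and a stronger model to stay unbiased toward specific brands and materials.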

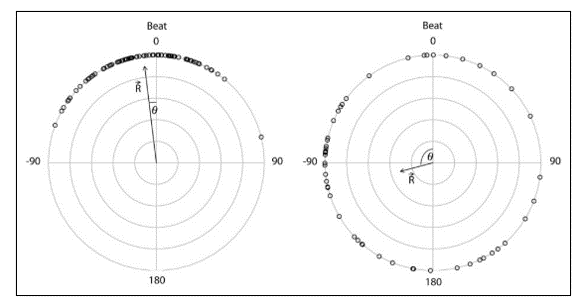

Steady Beat and Rhythm Scoring

We use high frequency content (HFC) for onset detection. We are working on using circular statistics and rhythm similarity algorithms to score how accurately a child reproduces a steady beat or rhythm.
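As a minimal sketch of how these two stages could fit together: HFC onset detection is followed by a circular-statistics steady-beat score. The poster does not give frame sizes, thresholds, or the specific circular statistic. The parameter values below, the peak-picking rule, and the choice of the mean resultant length R (with the circular mean phase as an asynchrony estimate) are our assumptions. Rhythm similarity scoring is not shown. The function names `hfc_onset_times` and `steady_beat_score` are hypothetical, and the input is a synthetic test signal.

```python
import cmath
import math
import random


def hfc_onset_times(signal, sample_rate, frame_size=128, hop=64, rel_threshold=0.1):
    """Onset times (s) from rises in high frequency content (HFC) between frames."""
    # Hann window to reduce spectral leakage
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size) for n in range(frame_size)]
    bins = range(1, frame_size // 2 + 1)
    # naive DFT twiddle factors, precomputed once (a production system would use an FFT)
    twiddle = [[cmath.exp(-2j * math.pi * k * n / frame_size) for n in range(frame_size)]
               for k in bins]
    starts = range(0, len(signal) - frame_size + 1, hop)
    hfc = []
    for start in starts:
        frame = [s * w for s, w in zip(signal[start:start + frame_size], window)]
        # HFC = sum over bins of k * |X_k|^2, which emphasizes broadband transients
        hfc.append(sum(k * abs(sum(f * e for f, e in zip(frame, row))) ** 2
                       for k, row in zip(bins, twiddle)))
    # half-wave rectified first difference: only increases in HFC can mark an onset
    rise = [max(0.0, b - a) for a, b in zip([0.0] + hfc[:-1], hfc)]
    threshold = rel_threshold * max(rise, default=0.0)
    onsets = []
    for i, value in enumerate(rise):
        prev = rise[i - 1] if i > 0 else 0.0
        nxt = rise[i + 1] if i + 1 < len(rise) else 0.0
        if value > threshold and value > prev and value >= nxt:  # local peak
            # report the frame start, so times may precede the true onset by up to one frame
            onsets.append(starts[i] / sample_rate)
    return onsets


def steady_beat_score(onset_times, period, origin=0.0):
    """Circular statistics: wrap each onset onto the target beat cycle as a unit vector.

    Returns (R, mean_asynchrony_s). R in [0, 1] is the mean resultant length
    (1 = every onset at the same phase of the beat). The circular mean phase is
    converted back to seconds (negative = early relative to the beat).
    """
    if not onset_times:
        return 0.0, 0.0
    resultant = sum(cmath.exp(2j * math.pi * (t - origin) / period)
                    for t in onset_times) / len(onset_times)
    return abs(resultant), cmath.phase(resultant) / (2 * math.pi) * period


# Toy input: silence with decaying noise bursts on a 0.5 s beat (synthetic, not study data).
SR = 4000
rng = random.Random(0)
signal = [0.0] * (2 * SR)
for cue in (0.25, 0.75, 1.25, 1.75):
    start = int(cue * SR)
    for n in range(160):
        signal[start + n] = rng.uniform(-1.0, 1.0) * math.exp(-n / 40)

onsets = hfc_onset_times(signal, SR)
r, asynchrony = steady_beat_score(onsets, period=0.5, origin=0.25)
```

Phases here are measured relative to the target period. A child who keeps a perfectly steady beat at a different tempo will therefore also receive a low R. Whether that is the desired behaviour would need to be checked against how music experts score the same performances.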

Usability Testing (Evaluation)

Using video games, we are testing our system with children and music experts to validate the design of an audio detection interface that (1) classifies instruments accurately and provides a more enjoyable gaming experience than a traditional touch screen, and (2) scores rhythm and steady beat production as a music teacher would.

Results to Date (Oct 2020)

Instrument Classifier: Ready for testing, achieves 94% accuracy

Onset Detection Algorithm: Deployed using high frequency content

Next Steps:

- Conduct a usability study with children to determine whether the audio detection interface promotes an engaging user experience for children with and without disabilities.

- Develop steady beat and rhythm algorithms.

- Evaluate steady beat and rhythm algorithms with music experts.

Acknowledgements PEARL Lab | Bloorview Research Institute | University of Toronto

I would like to thank the PEARL lab and Professor Elaine Biddiss for accepting me as a Master's student. This work would not have been possible without the consistent support of Ajmal Khan and Alexander Hodge. I would also like to thank our music experts, Chris Donnelly and Catherine Mitro, for their continued support.

Thank you to the Bloorview Research Institute and the Biomedical Department at the University of Toronto for giving me the chance to conduct this thesis work.